kvcache-ai/ktransformers

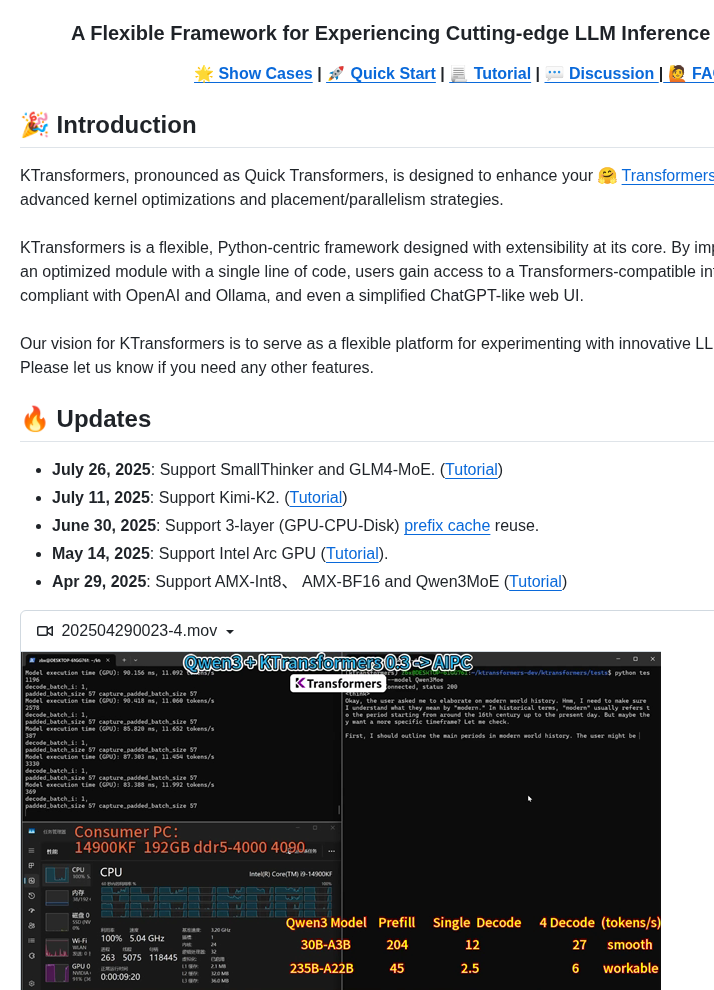

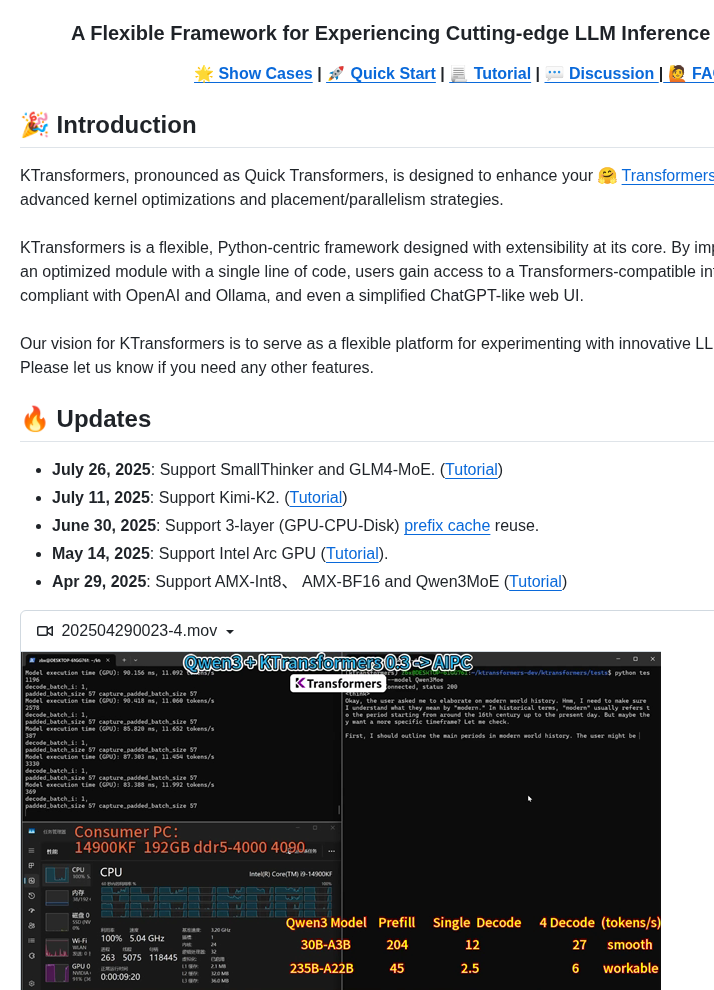

A framework for fast, resource-efficient LLM inference on local hardware.


