Dao-AILab/flash-attention

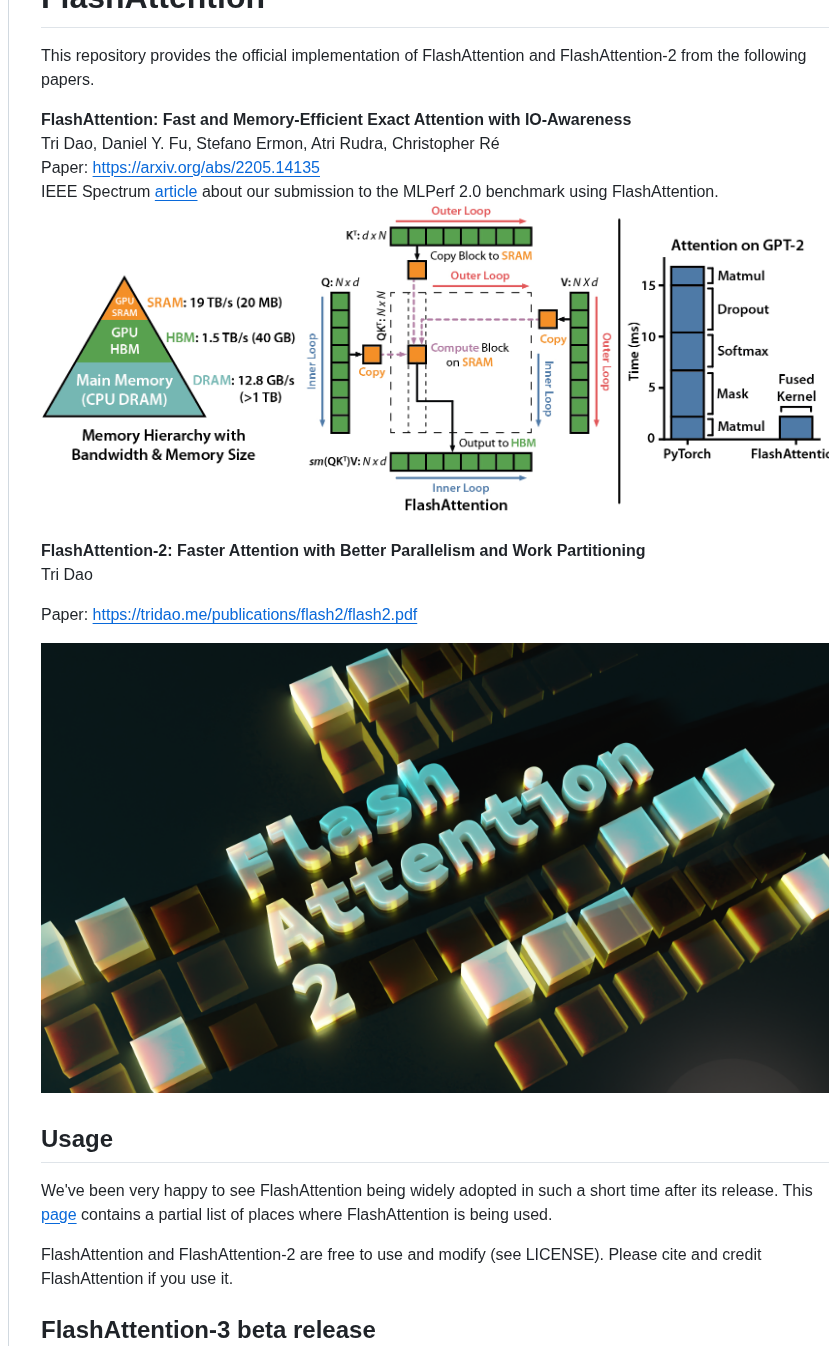

An attention algorithm for transformers that improves speed and memory efficiency.
